Project Description

ArchiVision - AI Extended Metadata + AI Assistive Technology for Archive Images

Problem Definition

Government image archives, like those in ACT Memory and the Public Record Office of Victoria, currently face challenges related to searchability, accessibility, and usefulness.

Raw images are hard to organise for quick searching. Their metadata is limited to basic details such as ID, title, the box, folder, or series they belong to, and other physical traits. These fields say little about what an image actually shows, so searching on them alone is difficult.

Adding context to images through manual tagging is cumbersome. A person must view each image and rely on their own skill to supply accurate, relevant keywords.

Image archives are currently delivered only as on-screen images and text, which serves only users with good eyesight. This raises Environmental, Social, and Governance (ESG) concerns, because visually impaired people are excluded from accessing them.

Our Solution

To address these challenges, we developed ArchiVision, a prototype for an AI Extended Metadata and AI Assistive Technology system to enhance archive images.

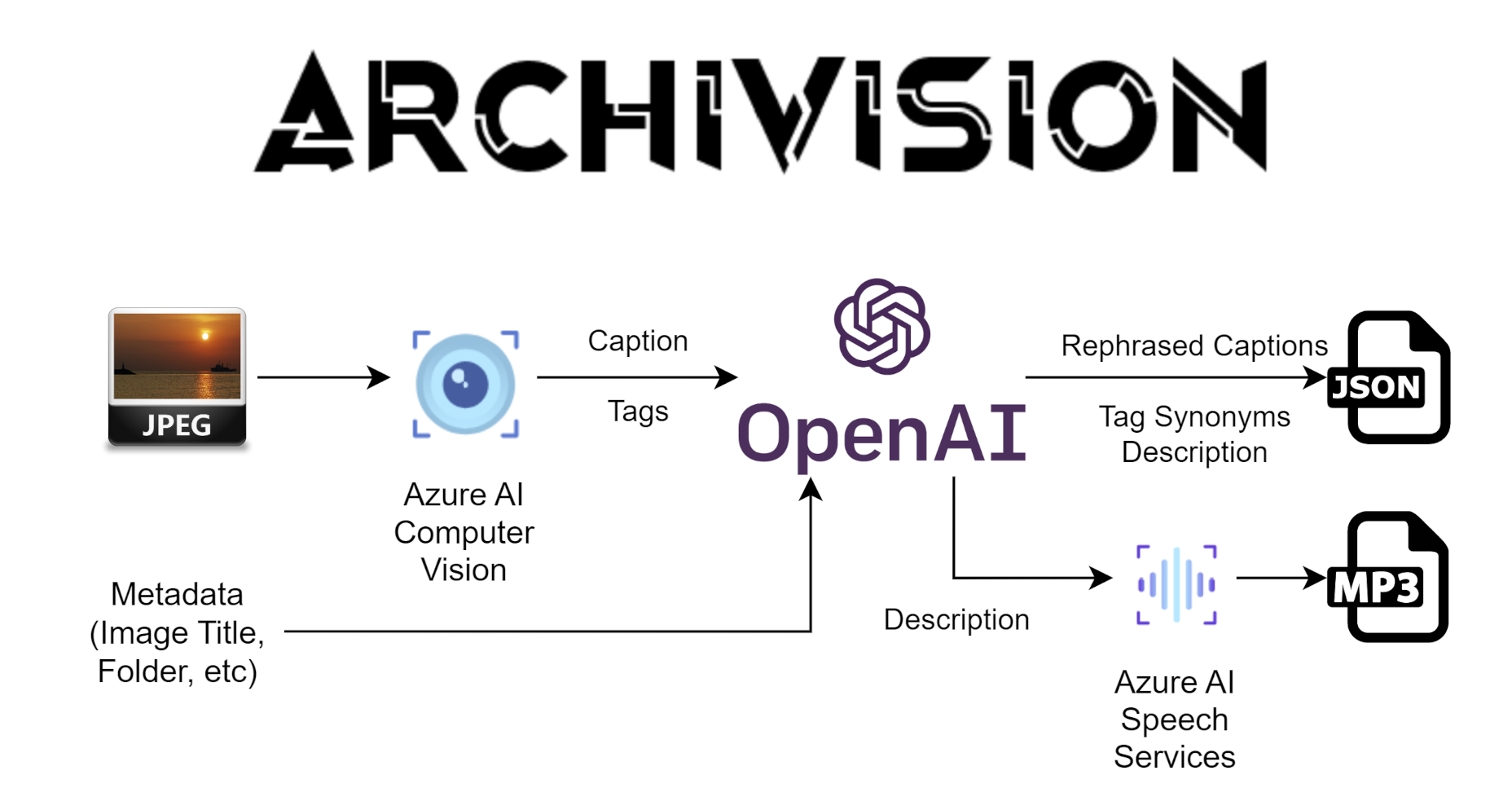

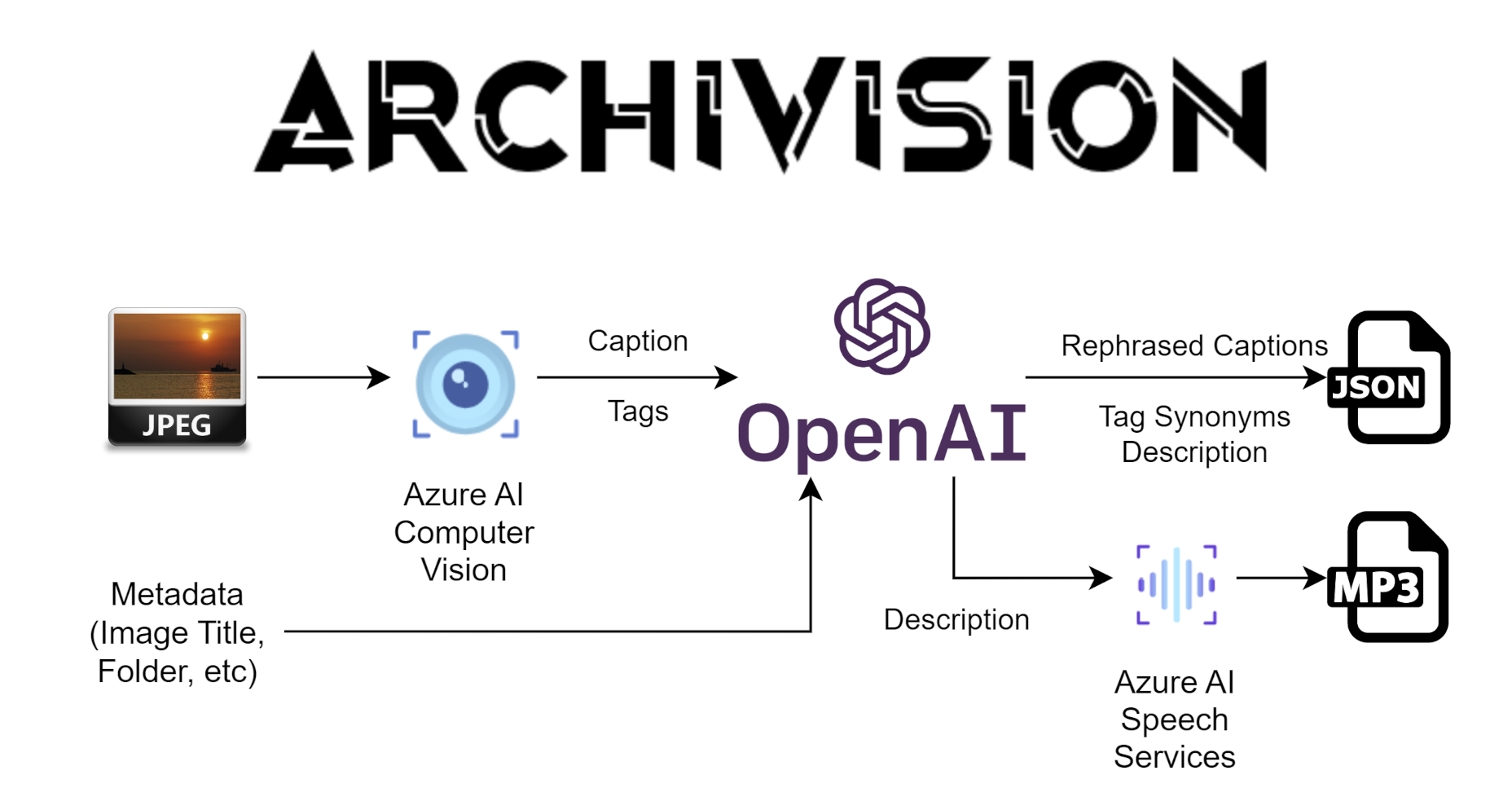

Through a range of AI techniques, ArchiVision produces metadata in JSON format that can be integrated into archival databases. It also generates MP3 audio files, which can be played alongside the images to assist visually impaired users.

ArchiVision takes a CSV file as input, containing image IDs, titles, and URLs. Each image is first processed by the Azure AI Vision service to create a caption and tags. This output, along with basic metadata such as the image title, is then forwarded to OpenAI's Large Language Model (LLM) to generate additional metadata, including rephrased captions, tag synonyms, and a description. These are stored in a JSON file, and the description is then sent to Azure AI Speech to create an MP3 audio file.
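The order of the stages described above can be sketched as follows. This is only an illustration under stated assumptions, not the project's actual code: the column names `id`, `title`, and `url`, the function name `run_pipeline`, and the three callables standing in for Azure AI Vision, the LLM, and Azure AI Speech are all hypothetical.

```python
import csv
import io


def run_pipeline(csv_text, vision, llm, speech):
    """Pass every CSV row through the three stages, in order.

    vision, llm and speech are placeholder callables (assumptions):
      vision(url)                -> (caption, tags)
      llm(title, caption, tags)  -> dict containing at least "description"
      speech(text)               -> MP3 bytes
    """
    results = []
    # Assumed header row: id,title,url
    for row in csv.DictReader(io.StringIO(csv_text)):
        caption, tags = vision(row["url"])            # stage 1: caption and tags
        extended = llm(row["title"], caption, tags)   # stage 2: extended metadata
        audio = speech(extended["description"])       # stage 3: audio from description
        results.append({
            "id": row["id"],
            "caption": caption,
            "tags": tags,
            "extended": extended,
            "audio": audio,
        })
    return results
```

Passing the services in as callables keeps the sketch runnable without credentials; real clients would replace the three placeholders.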

Benefits

Improved Searchability: The generated tags, captions, and descriptive text serve as metadata for each image. When users search for specific keywords or phrases, the search engine can utilize this metadata to provide more accurate search results. This makes it easier for users to find the images they're looking for.

Keyword Search: Users can enter keywords related to the content they are searching for, and the AI-generated tags can serve as potential search terms. This expands the range of search terms users can use, even if they might not have thought of those terms themselves.

Contextual Understanding: The generated captions and descriptive text provide context about the image's content. This is especially valuable when users are looking for images with specific themes, settings, or scenarios. The system's descriptive text can help users understand the content of the image without needing to view it, which saves time.

Browsing and Filtering: Users can navigate the image archive by browsing through thumbnails or previews. The generated captions and tags can help users quickly identify images of interest, allowing them to filter through the collection more efficiently.

Accessibility: Descriptive text converted to audio can aid visually impaired users who wouldn't otherwise be able to consume the images.

Time Savings: Users won't need to spend as much time manually tagging images. The system automates this process, saving valuable time and effort.
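To illustrate the searchability and keyword search benefits, here is a minimal sketch of matching a query against the generated metadata. The record keys (`tags`, `tag_synonyms`, `caption`, `description`) and the function name `search` are assumptions made for this example; a real archive would more likely index the metadata in its own search engine.

```python
def search(records, query):
    """Return the IDs of records whose generated metadata matches the query.

    A record matches when the query equals a tag or tag synonym, or appears
    as a substring of the caption or description (case-insensitive).
    """
    q = query.strip().lower()
    if not q:
        return []
    hits = []
    for rec in records:
        # Collect tags plus all of their synonyms as exact-match terms.
        terms = {t.lower() for t in rec.get("tags", [])}
        for synonyms in rec.get("tag_synonyms", {}).values():
            terms.update(s.lower() for s in synonyms)
        # Free text is searched by substring.
        text = " ".join([rec.get("caption", ""), rec.get("description", "")]).lower()
        if q in terms or q in text:
            hits.append(rec["id"])
    return hits
```

With synonyms included, a query such as "automobile" can find an image that was only tagged "car".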

Limitations

ArchiVision is limited by its reliance on general-purpose models such as Azure AI Vision. These models are designed for a wide variety of images and may miss nuances in specific contexts, such as captioning vintage photographs. Black-and-white images also produce more errors than colour images, including misidentified shapes. Training on vintage datasets could help, but challenges such as diverse styles and variable image quality would remain. For now, human verification may still be needed.

Prompt engineering is a developing field, and prompts occasionally yield responses with an unexpected structure. For instance, the OpenAI model does not always follow the natural-language instruction to return CSV output and may return a numbered list instead.
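One way to cope with this is to parse the reply tolerantly. The sketch below is an assumption about how such handling could look, not a description of what ArchiVision does; `parse_llm_list` is a hypothetical helper that accepts either quoted CSV or a numbered or bulleted list.

```python
import csv
import io
import re

# Matches list markers such as "1.", "2)", "-" or "*" at the start of a line.
_MARKER = re.compile(r"^\s*(?:\d+[.)]|[-*])\s*")


def parse_llm_list(raw):
    """Return the items of a reply given as quoted CSV or as a numbered list."""
    lines = [ln for ln in raw.splitlines() if ln.strip()]
    if any(_MARKER.match(ln) for ln in lines):
        # Numbered or bulleted list: keep only marked lines, drop marker and quotes.
        items = [_MARKER.sub("", ln).strip().strip('"')
                 for ln in lines if _MARKER.match(ln)]
    else:
        # CSV: the csv module handles commas inside double-quoted items.
        reader = csv.reader(io.StringIO(raw), skipinitialspace=True)
        items = [cell.strip() for row in reader for cell in row]
    return [item for item in items if item]
```

Both `'"a dog", "a cat, sleeping"'` and `'1. "a dog"\n2. "a cat, sleeping"'` yield the same two items, so downstream code does not need to know which shape the model chose.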

Data Story

By design, the input data for this solution should be restricted to fundamental elements like the image itself and basic details such as the title, folder/container, and date.

The image is used as input for Azure AI Vision. ArchiVision uses two Azure AI Vision API requests:

- describe: returns a brief phrase

- tag: returns a group of tags along with a confidence level (a score between 0 and 1)
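As a rough sketch, the two results could be reduced to a caption and a tag list as shown below. The response layout used here (a `description.captions` list and a `tags` list, each entry carrying a `confidence`) is an assumption modelled on the service's JSON output, and the `min_confidence` cut-off is purely illustrative; the source does not say whether ArchiVision filters tags.

```python
def extract_caption_and_tags(describe_result, tag_result, min_confidence=0.5):
    """Pick the most confident caption and the tags above a confidence cut-off.

    Assumed shapes (not confirmed by the source):
      describe_result = {"description": {"captions": [{"text": ..., "confidence": ...}]}}
      tag_result      = {"tags": [{"name": ..., "confidence": ...}]}
    """
    captions = describe_result.get("description", {}).get("captions", [])
    # Use the highest-confidence caption, or an empty string if none was returned.
    caption = max(captions, key=lambda c: c["confidence"])["text"] if captions else ""
    # Keep only tags whose score (between 0 and 1) reaches the cut-off.
    tags = [t["name"] for t in tag_result.get("tags", [])
            if t["confidence"] >= min_confidence]
    return caption, tags
```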

Note that Computer Vision algorithms are optimised for precision, resulting in concise and straightforward captions, as well as a restricted number of tags.

With prompt engineering, the image title, caption, and tags are inserted into chat messages sent to the OpenAI Large Language Model. ArchiVision sends three separate chat messages to generate extended metadata:

- Rephrased Captions: "generate csv of 10 rephrases in double quotes:[caption]"

- Tag Synonyms: "[tags]: generate 10 synonyms for each tag in CSV in double quotes"

- Description: "turn these into simple 2 to 3 sentences to describe a single image:[title], [caption]"
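The three prompts above can be filled in with a small helper like the following sketch. The prompt wording is taken from the list; the function name `build_prompts`, the dictionary keys, and the choice to join tags with commas are assumptions.

```python
def build_prompts(title, caption, tags):
    """Fill the three prompt templates with the image title, caption and tags."""
    tag_text = ", ".join(tags)  # assumed separator; the source does not specify one
    return {
        "rephrased_captions":
            f"generate csv of 10 rephrases in double quotes:{caption}",
        "tag_synonyms":
            f"{tag_text}: generate 10 synonyms for each tag in CSV in double quotes",
        "description":
            "turn these into simple 2 to 3 sentences to describe a single image:"
            f"{title}, {caption}",
    }
```

Each value would then be sent as its own chat message, matching the three separate requests described above.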

These are then collected to output a JSON file with the following elements:

- ID (Input)

- Title (Input)

- URL (Input)

- Caption (Computer Vision Output)

- Rephrased Captions List (LLM Output)

- Description (LLM Output)

- Tags List (Computer Vision Output)

- Tag Synonyms (LLM Output)

- Raw Output (results from OpenAI, for reference)
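A record with these elements might be assembled and serialised as in the sketch below. The key names and the helper names `build_record` and `to_json` are hypothetical; only the list of elements comes from the description above.

```python
import json


def build_record(image_id, title, url, caption, rephrased_captions,
                 description, tags, tag_synonyms, raw_output):
    """Collect inputs and model outputs into one dictionary per image."""
    return {
        "id": image_id,                            # input
        "title": title,                            # input
        "url": url,                                # input
        "caption": caption,                        # Computer Vision output
        "rephrased_captions": rephrased_captions,  # LLM output (list)
        "description": description,                # LLM output
        "tags": tags,                              # Computer Vision output (list)
        "tag_synonyms": tag_synonyms,              # LLM output (tag -> synonyms)
        "raw_output": raw_output,                  # unparsed LLM replies, for reference
    }


def to_json(record):
    """Serialise a record as indented JSON, keeping non-ASCII characters readable."""
    return json.dumps(record, indent=2, ensure_ascii=False)
```

Keeping the raw LLM replies next to the parsed fields makes it easier to check a record by hand when parsing goes wrong.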

The description is also passed on to Azure AI Speech to generate an audio MP3.